The COVID-19 crisis demonstrated how important data has become to asset managers. At the start of the pandemic, managers faced a monumental task in simply maintaining regular business operations and transaction flows amid the disruptions. Once that immediate threat was handled, managers had to make critical strategic decisions about how to position their organizations to weather the crisis. Data management was at the center of both efforts.

“The past 12 months were a tremendous reminder that when volatility and uncertainty rises, the timeframe for significant business decisions compresses, and you have less time than you think,”

Asset managers that had made early investments in data infrastructure were positioned to make better and faster decisions in this high-pressure environment because they were able to get essential data into the hands of investment teams, client service professionals and other staff.

That experience has not been lost on senior management teams, some of whom were questioning the ROI on data initiatives before the pandemic. Today, many asset management firms are in the midst of wide-ranging overhauls designed to integrate data and data analytics into their workflows and investment processes.

These undertakings are aimed at radically enhancing the value firms extract from data by systemizing and reducing the amount of time and resources spent on its collection, processing and validation. “Reconciling data today requires too many processes and too much complexity,” says Jesse Robinson, Head of Investment & Client Data Services at Lord, Abbett & Co. “The efficiencies from simplification are what’s going to help drive the creation of future solutions and operating models.”

Asset managers engaged in these data management projects have taken important first steps toward an ultimate destination: the Single Source of Truth (SSOT). The Holy Grail of the data industry, the SSOT represents a universal “master record” that ensures all constituencies within an organization have timely access to data that is 100% accurate, up-to-date and consistent. As Lord Abbett’s Robinson describes it, the SSOT delivers data that is “locked in and locked down,” with no need for any additional review or confirmation. That pristine data can be safely fed to powerful artificial intelligence applications to revolutionize operational functions, investment processes and business decision-making.

The asset management industry is far from this data utopia but has started its long journey toward the SSOT. In this paper, we will trace that journey, recounting the progress made to date, assessing the state of the industry’s data integration as it stands today, and providing a glimpse of how data will continue to evolve into perhaps the industry’s most valuable asset.

The COVID-19 crisis presented asset management data professionals with an unprecedented challenge: providing employees working from home with the data they needed to do their jobs, while also preserving data security, compliance and integrity. According to the industry experts interviewed for this report, that sometimes meant putting carefully planned data projects on hold until workers returned to a more secure office environment. In other cases, the pandemic prompted firms to accelerate plans already in the works in order to meet organizational needs in the crisis. In particular, many firms sped up the rollout of artificial intelligence applications and of robotic process automation (RPA), the industry’s term for the automation of business processes.

As the world settles into a new normal, asset managers can return to more deliberate planning of their data management strategies. But managers cannot afford to move too slowly. Firms that lag rivals in data analytics risk falling behind in key measures like operational efficiency, client service and satisfaction, and potentially investment performance. “Data is the new oil for asset managers,” says Lord, Abbett’s Jesse Robinson. “Those who manage data most effectively will lead in creating new solutions for clients and drive successful business outcomes.”


In more immediate terms, financial service firms of all types face a fast-approaching deadline for many data initiatives, especially those focused on operational efficiency. That deadline is T+1. As Uday Singh, Head of Professional Services, Broadridge Financial Solutions, explains, the coming move to T+1 will be different from past compressions of the trade settlement cycle. The reason: In prior moves to T+3 and T+2, asset managers and other financial market participants could adapt to the shorter cycle times by throwing more resources and bodies at existing manual processes. In a 24-hour settlement cycle, firms will not be able to get by with speeding up legacy manual systems. “The move to T+1 will require automation throughout the trade lifecycle,” says Singh. “Across the organization, most manual inputs or interventions will have to be eliminated, and processes automated.” That automation will require data. Lots of data. The DTCC, ICI and SIFMA are working on the steps to move to T+1. Asset managers will have to prepare for that transition while simultaneously managing operational and data management challenges posed by other fast-moving industry developments like the replacement of LIBOR and changes to regulatory reporting rules.

With the global pandemic receding, asset management data professionals can turn their attention back to one of their highest-priority assignments: integrating environmental, social and governance (ESG) data into core operational systems.

In and of itself, the incorporation of ESG data into existing data platforms does not pose any particularly difficult challenges. “It’s just a question of setting it up within the data structure and tagging it appropriately,” says one data professional.

What might be causing problems for data teams, however, are rapidly increasing demands from investment teams for more and expanded ESG data. In general, investment teams have a seemingly endless appetite for new data. That’s especially the case with ESG. The lack of sufficient issuer data remains a significant challenge for asset managers working to integrate ESG into their investment processes. Not only do data professionals have to supply analysts and portfolio managers with increasing amounts of ESG data, they have to do so in a way that doesn’t disrupt or slow down the investment process. That requires a lot of work—most of which occurs behind closed doors and out of sight of the investment teams and, often, the rest of the firm. That challenge is being exacerbated by investment teams’ use of an expanding and constantly changing roster of ESG data providers.

The ultimate goal of the data teams is to provide the investment function with a single, comprehensive repository that alleviates the need for investment teams to search through multiple systems and sources to get the data they need, while keeping data management in the hands of the central data unit. “The end user should feel like he’s shopping on Amazon,” explains Adam Brohm, Global Investment Data Management/Global Process Owner at Vanguard. “I’d like these six pieces of data. The user puts the data into the cart and walks away.”

Asset managers can ensure their investment teams receive that type of service by first focusing on the fundamentals of data management, infrastructure and controls. Once a firm builds a good foundation based on basic security reference data, security transactions, ratings and other traditional data points, it can begin layering on new data like ESG. “It’s like making the sausage,” Brohm says. “You don’t want to see the messy process that gets you the data, you just want the data.”

Will the industry be ready? In general, the asset management organizations with the biggest technology budgets are best prepared. However, even some large organizations have struggled with the complexity of data integration. One expert explained that at some firms, there exists “a pervasive disconnect between where the executives thought they would be on their data journey, and where they actually are.” Other data professionals cite examples of several major firms that invested millions of dollars to build internal data programs and infrastructure, but are now pivoting and outsourcing the entire effort to external platforms.

Despite this uneven progress, one would be hard-pressed to find an asset manager in the United States that is not utilizing data analytics in at least some capacity. As a result of these efforts, asset managers are realizing “positive cascading contributions across the entire business lifecycle from data management,” says Chris Fedele, VP, GTO Regulatory Management and Strategy at Broadridge.

Perhaps the most obvious payoff from data so far has been vast operational efficiency gains, many of which will be essential in the move to T+1. Matt Sweeney, Director of Investment Operations for MFS Investment Management, says asset managers have saved “thousands and thousands” of man hours through the use of intelligent automation systems. These systems can be applied to any basic operation. In fact, once a firm builds a framework for this relatively simple automation process, it can be applied to many different functions.

Another industry data professional says his firm employs more than 50 of these applications, called “bots.” At his firm, bots are used in functions such as client reporting and the creation of marketing materials. Across the industry, this technology is being employed broadly for data inputs and reconciliations, and is also being used for other applications like monitoring shareholder trades.

Achieving benefits from automation requires careful planning. MFS’ Sweeney says his firm embarked on a painstaking examination of every area and function across the organization, itemizing all manual processes and assessing the potential for automation. In each case, they asked two questions: 1) How automatable is the process? and 2) Are we comfortable automating from a risk perspective? The firm then ranked the processes to see which automation projects would “give us the biggest bang for the buck on the risk side, and the efficiency side,” he says.
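The two screening questions above lend themselves to a simple scoring pass over an inventory of manual processes. The sketch below is only one plausible way to do that ranking: the 1-to-5 scales, the multiplication of the two answers by hours of manual effort, and every process name and number are assumptions made for illustration, not the method MFS used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ManualProcess:
    name: str
    automatability: int      # 1 (hard to automate) .. 5 (easy to automate)
    risk_comfort: int        # 1 (uncomfortable automating) .. 5 (comfortable)
    hours_per_month: float   # manual effort spent on the process today


def priority_score(process: ManualProcess) -> float:
    """Combine the two screening answers with the hours at stake (assumed formula)."""
    return process.automatability * process.risk_comfort * process.hours_per_month


def rank_candidates(processes: list[ManualProcess]) -> list[ManualProcess]:
    """Return processes ordered from most to least attractive to automate."""
    return sorted(processes, key=priority_score, reverse=True)


# Hypothetical inventory; names and numbers are invented for this example.
inventory = [
    ManualProcess("trade exception review", automatability=2, risk_comfort=2, hours_per_month=200),
    ManualProcess("cash reconciliation", automatability=5, risk_comfort=4, hours_per_month=120),
    ManualProcess("client report assembly", automatability=4, risk_comfort=5, hours_per_month=80),
]

ranked = rank_candidates(inventory)
for process in ranked:
    print(f"{process.name}: {priority_score(process):.0f}")
```

A multiplicative score means a process that scores poorly on either question drops down the list even when it consumes many hours, which matches the idea of weighing the risk side and the efficiency side together.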

At the moment, operational enhancements like these are the primary focus for many firms, and experts say that across the industry there are still many more efficiency improvements yet to come. However, for early adopters of data analytics, much of the lowest-hanging automation fruit has been picked. For these managers, the goal of eliminating work hours in operational processes is becoming secondary to other objectives like enhancing risk management and decision making. “We’re looking more at risk reduction, as opposed to headcount reduction,” says MFS’ Sweeney. As examples he cites the firm’s work deploying automated systems that review trading and portfolio management processes to flag issues that warrant human attention.

The industry’s increasing reliance on data analytics is creating a new challenge: the massive proliferation of data. The rapid increase in the number of data streams and quantity of data used by asset managers is forcing firms to spend huge amounts of time acquiring, processing and distributing data.

“No one in the asset management industry is running into problems from a lack of data,” says Prudential’s Michael Herskovitz. “Rather, the task facing data professionals is figuring out how to identify the valuable data streams from the massive amount of data firms have on hand.”

It’s not just the amount of data that’s a problem—it’s also the fact that data exists in so many places within organizations. Asset managers, like many financial service companies, sometimes run on somewhat disjointed legacy IT systems, frequently the result of past acquisitions and mergers. Within these systems, data can reside in multiple silos. Often, this replicated data is created by employees in the course of normal job functions. This problem has actually been exacerbated by innovation. Individuals or groups with more storage and capabilities can more easily capture and manipulate their own data, isolating themselves from the broader organization. The issue reached something of a zenith during COVID-19, when professionals working from home took creative steps to access and store data. Asset managers are also bringing in more data from custodians, transfer agents and other external providers and merging it with their own proprietary data, often creating separate data streams that exist in parallel with other internal sources.

Due to these and other factors, small variations in data persist across organizations. There is an almost infinite variety of potential inconsistencies that, once introduced into the system, can be difficult to root out. For example, within one firm, a company might be listed under slightly different names, appearing in one place by its acronym and in another with its name spelled out. Other data might be accurate when it’s introduced, but become out of date because it’s stored on a personal computer or team network that’s not connected to the central system.
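The acronym-versus-full-name problem can be illustrated with a small rule-based check. This is a deliberately simple sketch, not a machine learning approach: the alias table, the list of legal suffixes and the sample records are all hypothetical, and a real firm would need far richer reference data.

```python
import re
from collections import defaultdict

# Hypothetical alias table mapping an acronym to a canonical spelled-out name.
ALIASES = {
    "ibm": "international business machines",
}

# Legal suffixes dropped before comparison (assumed list, not exhaustive).
SUFFIXES = {"inc", "corp", "corporation", "co", "ltd", "llc", "plc"}


def canonical_name(raw: str) -> str:
    """Lowercase, strip punctuation and legal suffixes, then resolve aliases."""
    cleaned = re.sub(r"[^a-z0-9 ]", "", raw.lower())
    words = [word for word in cleaned.split() if word not in SUFFIXES]
    key = " ".join(words)
    return ALIASES.get(key, key)


def find_variants(records: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Group (system, name) records by canonical name; keep names with several spellings."""
    groups: dict[str, set[str]] = defaultdict(set)
    for _system, name in records:
        groups[canonical_name(name)].add(name)
    return {key: names for key, names in groups.items() if len(names) > 1}


# Invented sample: the same issuer held under two spellings in two systems.
records = [
    ("trading", "IBM"),
    ("risk", "International Business Machines Corp."),
    ("crm", "Acme Ltd"),
]
variants = find_variants(records)
```

Here `variants` contains a single entry, keyed by the canonical name, listing both spellings of the issuer so that someone can decide which one becomes the master record.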

Although some of these differences seem minor, they can create major risks. Faulty or out-of-date data can create errors in both operational processes and decision making, exposing the firm to both financial and regulatory consequences. The proliferation of data in multiple sites also increases the risk of a manager running afoul of data privacy regulations.

To address these problems, asset managers are turning to machine learning and artificial intelligence (MLAI) tools that help find and eliminate data inconsistencies. As Vanguard’s Adam Brohm explains, firms with multisource data structures “can layer MLAI over the top. The machine starts to learn and identify inconsistencies, instead of people having to comb through themselves.” However, establishing a truly reliable and consistent data stream requires more than targeted technology fixes. To avoid risks and capture the full value of data analytics, asset managers need to start at the top by committing to a comprehensive data management and governance policy for the entire organization.

Although cyberattacks and data breaches represent dire risks for financial service firms, hacks are not the only danger that keeps data professionals up at night. Data professionals live in a state of constant concern that someone, somewhere in their organizations will make a decision based on bad data that results in significant economic and/or regulatory consequences. That risk is compounded in the case of “unknown errors,” in which no one realizes that the data informing a decision was faulty, and consequences play out unnoticed. For that scenario to arise, data doesn’t need to be wrong. In many cases, it’s just out of date. “We constantly worry about stale data going into risk models or the decision-making process,” says one data professional.
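A basic guard against stale inputs is to compare each data point's as-of timestamp with a maximum allowed age before it reaches a model. The sketch below assumes every input carries such a timestamp; the 24-hour threshold and the input names are illustrative choices, not figures from the interviews.

```python
from datetime import datetime, timedelta, timezone


def is_stale(as_of: datetime, now: datetime, max_age: timedelta) -> bool:
    """True when the data point is older than the allowed age."""
    return now - as_of > max_age


def stale_inputs(inputs: dict[str, datetime], now: datetime, max_age: timedelta) -> list[str]:
    """Return the names of inputs too old to use, sorted for stable output."""
    return sorted(name for name, as_of in inputs.items() if is_stale(as_of, now, max_age))


# Hypothetical as-of timestamps for two model inputs.
now = datetime(2021, 6, 2, 8, 0, tzinfo=timezone.utc)
inputs = {
    "prices": datetime(2021, 6, 1, 22, 0, tzinfo=timezone.utc),   # 10 hours old
    "ratings": datetime(2021, 5, 28, 22, 0, tzinfo=timezone.utc), # several days old
}
flagged = stale_inputs(inputs, now, max_age=timedelta(hours=24))
```

With these values only `ratings` is flagged. In practice the threshold would differ by data type, since a credit rating and an intraday price age at very different rates.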

Managing these risks is a precarious balancing act requiring preventative controls that minimize opportunities for errors, monitoring tools that identify errors when they occur, and a resolution process. The resolution process itself must be monitored as it can introduce new risks. “Any time you have manual intervention you’re open to something going really wrong,” says Prudential’s Michael Herskovitz. “Human error can introduce changes that go unnoticed.”

Sophisticated solutions like artificial intelligence, machine learning and robotic process automation will only deliver on their promise if they are fed data with sound data lineage, meaning that the firm has 100% confidence in the validity of the data because it understands where the data came from, how it moved through the organization and if or how it was altered.
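To make the three lineage questions concrete (where the data came from, how it moved, whether it was altered), the sketch below attaches a small log to a record and stores a content hash at each step. The class, step names and system names are hypothetical; production lineage tooling is considerably more involved.

```python
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(payload: dict) -> str:
    """Stable SHA-256 hash of a record's content."""
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


@dataclass
class TrackedRecord:
    payload: dict
    lineage: list[dict] = field(default_factory=list)

    def log(self, step: str, system: str) -> None:
        """Append where the data is now and a hash of its current content."""
        self.lineage.append({"step": step, "system": system, "hash": fingerprint(self.payload)})

    def altered_steps(self) -> list[str]:
        """Names of steps whose content hash differs from the step before."""
        changed = []
        for previous, current in zip(self.lineage, self.lineage[1:]):
            if previous["hash"] != current["hash"]:
                changed.append(current["step"])
        return changed


# Invented example: a price is received, loaded, then corrected by hand.
record = TrackedRecord({"security_id": "X1", "price": 101.5})
record.log("received", "vendor_feed")
record.log("loaded", "warehouse")
record.payload["price"] = 101.7
record.log("manual_correction", "ops_desk")
```

Calling `record.altered_steps()` returns `["manual_correction"]`, showing which step changed the content, while the `system` entries record the path the data took.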

That level of certainty cannot be achieved by applying tactical solutions to weed out data inconsistencies. Firms will only be positioned to unlock the full value of data analytics if they build their data operations on the foundation of a comprehensive data governance and management strategy.

One of the most important components of a data governance program is a process for cataloging and normalizing data across the firm. In industry parlance, this is sometimes known as master data management (MDM). The central goal of MDM is to establish universal data categories, or a common data dictionary, and sort every bit of data into the right place in that framework.

It’s impossible to overstate what a huge effort this is in a large organization. “It sounds so easy: We’ll just take all our data and databases and give them common definitions,” says Broadridge’s Chris Fedele. “But that’s not the reality. It takes a lot of work to get it done.”

Even something as seemingly simple as securities data can get complicated quickly. Although there are common fields for securities data across the industry, individual firms have almost always introduced some unique internal nomenclature. And those proprietary fields exist for a reason. Since they were created for some business purpose, they can’t just be arbitrarily eliminated. Working together, the data team and the business owners must review every item and decide on a compromise that will not only work for that group, but will also fit into the universal dictionary. “It’s a laborious process and one that consumes an extraordinary amount of time,” Fedele says, “but ultimately it’s well worth it.”
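One way to picture the common dictionary is as a set of agreed field names plus a mapping from each system's proprietary names. In the sketch below the field names, system names and mappings are all hypothetical; fields with no agreed mapping are returned separately rather than dropped, since they exist for a business reason and need review.

```python
# Hypothetical common dictionary: the agreed, firm-wide field names.
COMMON_FIELDS = {"security_id", "issuer_name", "asset_class", "maturity_date"}

# Hypothetical per-system mappings from proprietary field names to common ones.
FIELD_MAPS = {
    "trading": {"SecID": "security_id", "Issuer": "issuer_name", "Class": "asset_class"},
    "risk": {"sec_identifier": "security_id", "issuer_nm": "issuer_name", "mat_dt": "maturity_date"},
}


def to_common(system: str, record: dict[str, object]) -> tuple[dict[str, object], list[str]]:
    """Translate a record to common field names; also return any unmapped fields."""
    mapping = FIELD_MAPS[system]
    translated: dict[str, object] = {}
    unmapped: list[str] = []
    for field_name, value in record.items():
        common = mapping.get(field_name)
        if common in COMMON_FIELDS:
            translated[common] = value
        else:
            # No agreed home yet: flag for the data team and the business owner.
            unmapped.append(field_name)
    return translated, unmapped


translated, unmapped = to_common(
    "risk", {"sec_identifier": "X1", "issuer_nm": "Acme", "desk_code": "FI7"}
)
```

In this invented example `desk_code` comes back in `unmapped`, which is the kind of item the data team and the business owners would have to review together.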

Creating a data governance strategy is one thing. Actually deploying that strategy is another. Implementing a data governance or MDM program is hard because organizations are not starting from scratch. Since the data are already being utilized by functions and teams across the organization, establishing a new central governance policy can be like rebuilding an engine while it’s running.

Many asset managers are in the midst of just this type of firmwide data governance/MDM initiative. Often, these managers will use mandatory systems upgrades or transitions as opportunities to revisit data management policies. For example, one large asset manager decided to switch from its legacy, proprietary trading platform to a third-party platform. The organization saw it as “the perfect opportunity to build a new system to establish data consistency throughout the organization,” says one data professional at the firm. “It’s kind of the search for the golden source, the golden record,” he says. Other firms have adopted similar strategies, including one major asset management firm that decided to use the migration of its data centers as a “discovery mechanism” to get visibility into “what data its teams were using and how they were using it, and to catalog all data in a central repository,” according to one source at the firm.

These data harmonization efforts provide an opportunity to think more strategically about how an organization organizes its data, how it staffs various operational functions, and how the firm can apply technology to make processes more efficient. Because of the comprehensive nature of those goals, asset managers are advised to solicit the direct participation of senior management and include stakeholders from across the firm. One data professional said the team leading data harmonization at his firm includes the president of the firm’s distribution company, the CTO, the CIO and the CIO of fixed income. The project is being directly run by a steering committee consisting of representatives from operations, distribution and IT. One of the first steps was to bring in an external consultant to assess the lay of the land and make recommendations. With that analysis in hand, the firm is now building the roadmap for the broader data harmonization effort.

Prudential’s Michael Herskovitz says hiring a chief data officer in 2020 was a critical step. It demonstrated that, although the “stove-pipes of data” that existed throughout the firm had been effective within their own discrete domains, the needs of the organization had outgrown that framework. The hiring of the CDO was a way to establish ownership, build formal governance, and establish data consistency across the firm. It was imperative to get this person into place quickly, because technology was evolving rapidly. “Independent business areas were picking tools for predictive analytics and machine learning,” he said. “People were moving in their own directions.” The appointment of the CDO helped shift the firm’s strategy to the organizational level and toward a hub-and-spoke model in which analytic technologies were selected and housed centrally, and then adopted and applied by business units.

The cloud is helping asset managers leapfrog data and IT challenges. Many of the biggest obstacles to technology adoption and advancement are physical. The cloud removes most physical constraints by eliminating arduous tasks like building data centers, servers and storage. “Putting everything in the cloud has yielded significant efficiencies for us,” says one data professional. “It eliminated a lot of the redundant infrastructure and gave us much better control over what’s going on and what’s going out to remote users.”

One of the biggest benefits of the cloud is access to a new generation of tools, including ETL (extract, transform and load) applications, advanced predictive analytics, machine learning, and natural language processing. The cloud also offers flexibility—especially when it comes to software innovation. Ultimately, these technologies and applications will be the keys asset managers use to unlock the value of data, and the cloud is making them possible.

Cloud computing enables organizations to tap large numbers of virtual machines for computing power, as opposed to relying solely on machines housed in their own data centers. This scalability enables organizations to complete in hours the complex calculations, in areas like risk analytics, that once took weeks.

This speed buys the invaluable asset of time. Data professionals at asset management organizations always have their eyes on the clock. Every firm must start each business day with fresh data, validated and ready for the open. If that data isn’t ready, the firm lacks basic information on things like positions and pricing. But every 24 hours, data teams face the risk of some snag or error in the night cycle that delays the process. By increasing processing power and accelerating calculations, firms that move to the cloud are building more time into their night cycles and creating additional breathing room to deal with any data issues that might arise before the open.

Nevertheless, the buy side trails the sell side by a considerable margin when it comes to cloud adoption. Sell-side business models are based largely on calculations that enable them to manage positions and risk. Because those calculations involve such massive data sets, sell-side firms were quick to embrace the faster, cheaper cloud technology. Their early investments have allowed them to use the cloud to something much closer to its full potential. Of course, regulatory challenges have imposed obstacles, but conversations are being held with firms, regulators and service providers to encourage cloud adoption.¹

One of the most important lessons buy-side firms can learn from the sell side is this: Don’t build your own cloud. When the sell side first started embracing the cloud, it still had serious concerns about security, privacy and control. As a result, some firms decided to build and operate their own proprietary clouds and data centers. Today, the industry is realizing that individual firms can’t compete with the scalability, security, reliability and constant updates of major cloud providers.

The ultimate payoff from data analytics will come from the combination of big data and artificial intelligence.

As recently as five years ago, few asset managers truly understood what AI was and what it could do, and even fewer were generating any real value from it. Today, asset managers need an effective AI strategy just to keep up with competitors. As Michael Campbell, CEO of industry consultant Cosmos Technology says, “AI is no longer an IT initiative, it’s a strategic initiative.”

Asset managers are accessing AI through third-party technology vendors and from portfolio management systems and other platforms, which are increasingly baking AI-driven analytics into their offerings. These tools are delivering benefits across three main areas:

Almost every professional interviewed for this report stressed that sound data management policy and infrastructure must precede any widespread use of AI. AI is of little use unless you have these foundational aspects in place, explains Vanguard’s Adam Brohm. “Having an AI platform comb through unwieldy data would just result in bad learning,” he says.

For this reason, the industry is being deliberate and cautious in its rollout of AI, making sure both the business case and the data infrastructure are sound before proceeding. Managers are being particularly careful when it comes to investments. While MLAI is already having a real impact at the operational level, many asset managers are taking it slow when it comes to investment data. Adam Brohm says that, with investments, many data professionals still see MLAI as the next step in the industry’s data evolution, one that will eventually be layered on top of the foundational work now underway.

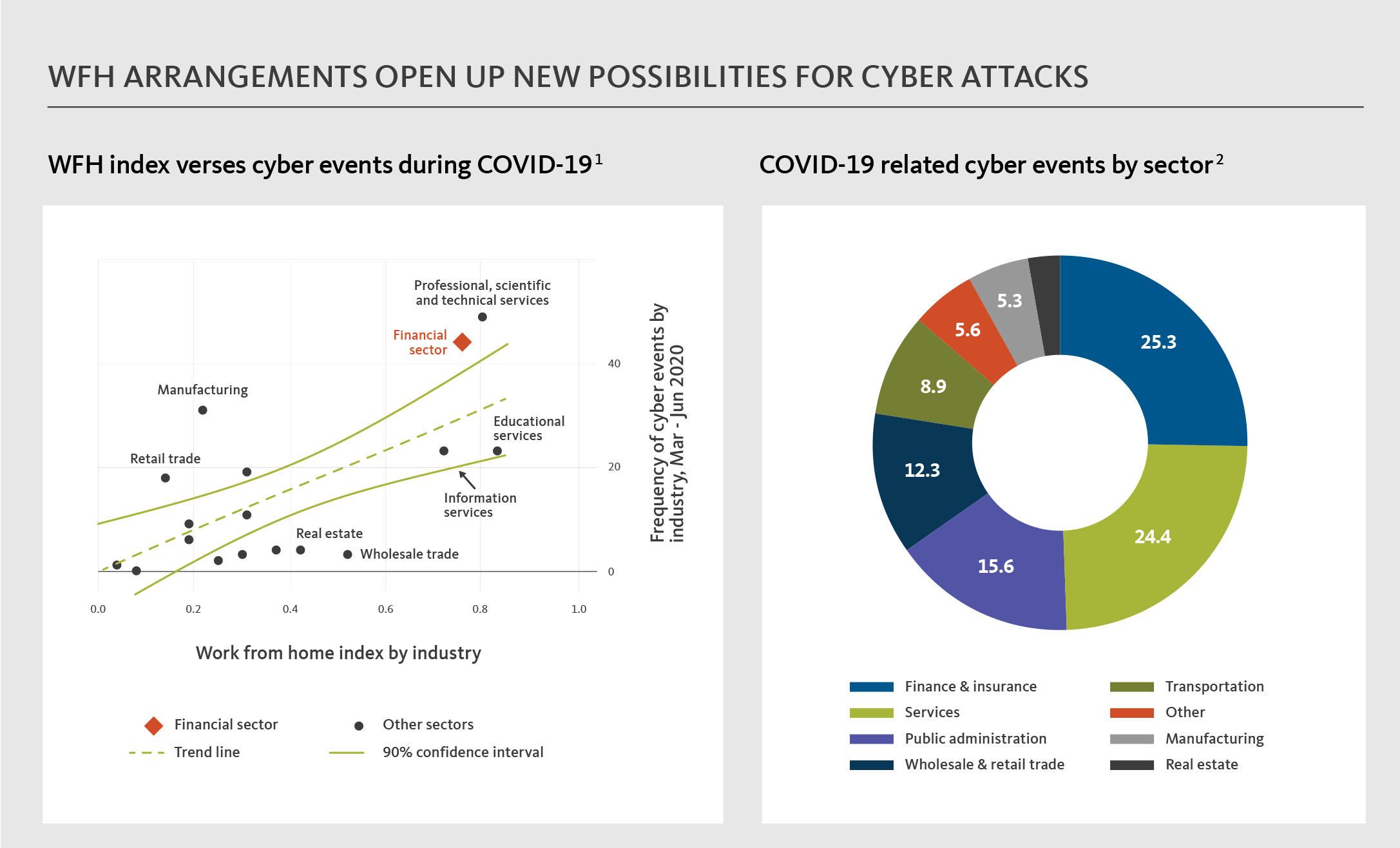

According to a 2021 report from the Financial Stability Board (FSB), COVID-19 triggered a wave of cyberattacks against financial service firms by individuals and groups looking to exploit security weaknesses and insider threats caused by the shift to work-from-home. The report found that the number of attacks, including phishing, malware and ransomware, increased from “just” 5,000 per week in February 2020 to more than 200,000 per week in April 2021.

“This is the new battlefield,” says Conor Allen, Head of Product at data security firm Manetu.

To keep up with the cybercriminals behind those attacks, asset managers and other financial service firms must make huge investments in new technology to protect their data. “The state of the art has moved on,” Allen says, adding that existing legacy technology platforms just don’t have the capabilities required for the advanced authentication, encryption and other techniques needed to protect financial service data from increasingly sophisticated attacks.

Because these emerging technologies are so expensive, asset managers must approach cybersecurity strategically. The first step in developing a data protection strategy that is both effective and economically practical is ranking data in terms of importance and risk. The next step is to determine the appropriate level of protection and resource requirement for each level of sensitivity.

Most organizations today use “role-based” controls for data authentication and access at varying levels of sensitivity and protection. But as attackers get better at penetrating these defenses, asset managers and other financial service firms will be forced to adopt more sophisticated “attribute-based” controls, which build on role-based security by adding in factors like the geographic location of the user, time of day, the user’s regular data consumption patterns, and even information like whether the user is working on a normal schedule or out of the office on holiday. Using these controls, anything out of the ordinary will send a red flag to the system and could block or limit access.
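The layering of attribute checks on top of a role check can be sketched as follows. The roles, countries, working hours and the rule that one red flag limits access while two or more block it are assumptions chosen for illustration; they are not drawn from any particular product or from the firms interviewed.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class AccessRequest:
    role: str
    country: str
    local_time: time
    on_leave: bool


# Hypothetical policy values chosen only for illustration.
ALLOWED_ROLES = {"portfolio_manager", "risk_analyst"}
USUAL_COUNTRIES = {"US", "GB"}
WORK_START, WORK_END = time(7, 0), time(19, 0)


def red_flags(request: AccessRequest) -> list[str]:
    """List the attributes that look out of the ordinary for this request."""
    flags = []
    if request.country not in USUAL_COUNTRIES:
        flags.append("unusual location")
    if not (WORK_START <= request.local_time <= WORK_END):
        flags.append("outside normal hours")
    if request.on_leave:
        flags.append("user is out of office")
    return flags


def decide(request: AccessRequest) -> str:
    """Role check first, then attribute checks layered on top."""
    if request.role not in ALLOWED_ROLES:
        return "deny"
    flags = red_flags(request)
    if not flags:
        return "allow"
    # Assumed rule: one anomaly limits access, several block it.
    return "limit" if len(flags) == 1 else "deny"
```

For example, a risk analyst in the US at 10:00 is allowed, the same analyst at 23:00 gets limited access, and a request from an unusual country at 23:00 while the user is on leave is denied.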

Financial service firms are also rolling out new tools to protect their most sensitive data in the event of a successful hack. Tokenization and other privacy-enhancing technologies, or PETs, allow firms to replace raw data like a client’s Social Security number with a placeholder, or token. Tokens can only be “resolved” and underlying data accessed by calling into a central data repository restricted to authenticated users and guarded by policy-based controls.
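The token-and-resolve pattern described above can be shown with a toy in-memory vault. This is a minimal sketch under stated assumptions: the `TokenVault` class, the `tok_` prefix and the user names are hypothetical, and a real repository would add persistent storage, encryption, authentication and audit logging.

```python
import secrets


class TokenVault:
    """In-memory stand-in for a central token repository (illustration only)."""

    def __init__(self, authorized_users: set[str]) -> None:
        self._authorized = set(authorized_users)
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        """Return a random placeholder for the value, reusing an existing one if present."""
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def resolve(self, token: str, user: str) -> str:
        """Return the underlying value, but only for an authorized user."""
        if user not in self._authorized:
            raise PermissionError(f"{user} may not resolve tokens")
        return self._token_to_value[token]


# Invented example values.
vault = TokenVault(authorized_users={"compliance_service"})
token = vault.tokenize("123-45-6789")
```

Downstream systems store and pass around `token` only. Because the token is random rather than derived from the number, a stolen copy of it reveals nothing without access to the vault.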

Even as firms work to build these protections, data security is being transformed by even more advanced encryption technology. In the near future, financial service data will be housed in what security professionals describe as the digital equivalent of thousands of safe deposit boxes within systems, all encrypted through powerful cryptography that makes it impossible to see what’s inside without going through the time-consuming process of trying to crack each encryption. “The goal is to protect the data by making it mathematically impossible to hack every safety deposit box,” says Nathaniel Rand, Head of Business Development at Manetu.

Even these advanced technologies represent just one element of data security. Companies may invest big in cybersecurity technology, but fall short on the policy and process. These vulnerabilities were exacerbated by the move to working from home, which brought more computers out of the office and into unsecured home environments. As one cybersecurity professional put it, even the best cybersecurity platforms can be undermined by “one kid getting on a device and clicking on one malicious website.”

Someday soon, some asset managers will provide clients with exposure to cryptocurrencies as a regular part of their business. At the moment, however, data and IT professionals are working to make sure they are prepared to accommodate crypto on existing data platforms.

While some professionals worry that crypto’s 24/7 trading cycle poses real problems for existing data management systems, most experts think their platforms are flexible enough to handle the challenge. “Since our main job is to support trading and market technology, it’s essential for us to be on the cutting edge of these developments and ensure our platforms are ready to support them,” says Broadridge’s Chris Fedele, adding that firms are already reviewing and working on the systems that would support crypto and blockchain.

Meanwhile, the industry continues experimenting with ways to utilize crypto’s underlying distributed ledger technology (DLT) in its own operations. Several asset managers have invested in “proof of concept” DLT initiatives. Despite the lack of breakthroughs to date, DLT remains an intriguing and promising technology that asset managers will continue to explore.

Most asset managers have gone all-in on the big data movement, with operations units, trading groups, investment teams, risk management departments, and sales and marketing divisions constantly on the lookout for new streams of data to mine for insights. But with new privacy rules, financial service firms’ massive data troves also represent a massive potential liability.

“Big data used to be all about volume, velocity, variety, veracity and value, the Five Vs of data. Today, you need to add an ‘S’ and a ‘P’ for security and privacy.”

Data experts say the adoption of the General Data Protection Regulation (GDPR) in Europe represented a tipping point for data privacy. With a lack of federal action in the United States leaving it up to individual states to enact their own privacy rules, many large asset managers are adopting strict GDPR compliance practices as their default, rather than contending with a series of differing requirements from state to state and country to country.

Enforcing these new rules across huge data holdings is a challenge. One issue cited by several data professionals is the EU’s “right to be forgotten.” For business, security and regulatory purposes, financial service computer networks must be constantly and fully backed up. But what happens when a client stops doing business with the firm, or when an individual asks that their data be purged from the system? At a more strategic level, managers are working to balance future requests for data removal against KYC, AML and other record-keeping requirements.
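The tension between removal requests and record-keeping obligations can be pictured as a simple decision rule. The sketch below is a minimal illustration only, not a description of any firm's actual process: the `ClientRecord` type, the `retention_until` field and the "defer"/"purge" outcomes are all hypothetical names, and real retention rules vary by jurisdiction.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ClientRecord:
    client_id: str
    # Hypothetical field: the last day a record-keeping rule (for example a
    # KYC-style obligation) requires the firm to keep this record, or None
    # if no such obligation applies.
    retention_until: Optional[date]


def handle_erasure_request(record: ClientRecord, today: date) -> str:
    """Return "defer" while a retention obligation is active, else "purge"."""
    if record.retention_until is not None and today <= record.retention_until:
        return "defer"
    return "purge"


# Illustrative records with made-up dates.
held = ClientRecord("client-001", retention_until=date(2030, 1, 1))
free = ClientRecord("client-002", retention_until=None)

print(handle_erasure_request(held, date(2025, 6, 1)))
print(handle_erasure_request(free, date(2025, 6, 1)))
```

A deferred request would still need to be tracked so the record, and any backup copies of it, can be purged once the obligation lapses.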

The consequences for violating privacy regulations can be severe. GDPR and privacy rules in California and other states fine companies per instance of violation. Even if those individual fines are small, financial service firms with hundreds of millions of clients are facing potentially huge risk exposures. And those costs don’t even take into account reputational damage from a data loss.
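To see how small per-instance fines scale, consider a rough back-of-the-envelope calculation. The sketch below assumes, as the paragraph describes, a flat fine per violation and one violation per affected client. The function name and the figures are hypothetical and chosen only for illustration; actual penalties depend on the regulation and the regulator.

```python
def fine_exposure(affected_clients: int, fine_per_violation: float) -> float:
    """Worst-case exposure if each affected client counts as one violation."""
    if affected_clients < 0 or fine_per_violation < 0:
        raise ValueError("inputs must be non-negative")
    return affected_clients * fine_per_violation


# Hypothetical figures: 2 million affected clients, 100 per violation.
exposure = fine_exposure(affected_clients=2_000_000, fine_per_violation=100.0)
print(f"{exposure:,.0f}")  # prints 200,000,000
```

Even at a modest per-violation amount, the total grows linearly with the number of affected clients, which is why large client bases translate into large exposures.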

Asset managers are working to minimize privacy risk by using process automation to reduce the number of users with access to data. “In the end, end users don’t need access to that data—they just want the process improvements,” says one data professional.

But as privacy laws get stricter, asset managers and other financial service firms might have to start asking themselves how much data is actually necessary for their business. One data expert believes the industry is starting to bump up against the limits of data collection in a society that increasingly values and protects privacy. “Today you can gather all types of data—news, current events, weather,” he says. “But the question becomes, is it ultimately useful, or is it redundant?”

For asset managers, the turmoil caused by the COVID-19 crisis could produce at least one small silver lining: after the pandemic, managers that retain remote work options might have an easier time recruiting employees with the data skills needed for ambitious data analytics plans.

Financial service firms hoping to hire data scientists and cybersecurity experts have both advantages and disadvantages. In the positive camp, financial service firms pay well, and they give data and IT professionals the opportunity to work on extremely complex and important problems, often using state-of-the-art technology. “People will always want to work in this industry because it’s inherently interesting,” says Prudential’s Michael Herskovitz. On the opposite side of the ledger, financial services firms have not exactly been famous for prioritizing work-life balance.

“The COVID crisis changed everyone’s mindset,” says Cosmos Technology’s Michael Campbell, who contends that asset managers have a real opportunity to use lessons from the pandemic to attract more young data and technology professionals to the industry. By retaining flexible and remote work models created during the work-from-home era, they can tap into a broader talent pool across the country and around the world, while also increasing the appeal of asset management jobs. Remote work options could also save firms money by allowing asset managers to hire outside high-wage talent centers like New York, Boston and San Francisco.

The asset management data journey is just beginning. In time, data analytics will influence, and possibly revolutionize, almost every aspect of the business. The only limit on that potential is the ability of managers to control the quality, flow and security of the data streams that will fuel ever-more powerful artificial intelligence applications. However, because data is proliferating at such a rapid rate, and because data exists in so many nooks and corners across organizations, achieving this level of control is no easy task. In fact, building the infrastructure that will provide that control has become the primary objective for asset management data and IT teams today. As the industry emerges from the worst days of the global pandemic, many firms are undertaking sweeping initiatives to establish the data governance and management policies – from the Single Source of Truth to ML/AI, cybersecurity and beyond – that will serve as cornerstones of their future data infrastructures. Once those sturdy foundations are in place, asset managers’ data journeys will begin to accelerate, with new data feeding new AI tools that unlock invaluable insights and generate potentially exponential gains in operational efficiency and business performance.
